Id

stringlengths 1

6

| PostTypeId

stringclasses 7

values | AcceptedAnswerId

stringlengths 1

6

⌀ | ParentId

stringlengths 1

6

⌀ | Score

stringlengths 1

4

| ViewCount

stringlengths 1

7

⌀ | Body

stringlengths 0

38.7k

| Title

stringlengths 15

150

⌀ | ContentLicense

stringclasses 3

values | FavoriteCount

stringclasses 3

values | CreationDate

stringlengths 23

23

| LastActivityDate

stringlengths 23

23

| LastEditDate

stringlengths 23

23

⌀ | LastEditorUserId

stringlengths 1

6

⌀ | OwnerUserId

stringlengths 1

6

⌀ | Tags

sequence |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

1 | 1 | 15 | null | 49 | 5466 | How should I elicit prior distributions from experts when fitting a Bayesian model?

| Eliciting priors from experts | CC BY-SA 2.5 | null | 2010-07-19T19:12:12.510 | 2020-11-05T09:44:51.710 | null | null | 8 | [

"bayesian",

"prior",

"elicitation"

] |

2 | 1 | 59 | null | 34 | 33671 | In many different statistical methods there is an "assumption of normality". What is "normality" and how do I know if there is normality?

| What is normality? | CC BY-SA 2.5 | null | 2010-07-19T19:12:57.157 | 2022-11-23T13:03:42.033 | 2010-08-07T17:56:44.800 | null | 24 | [

"distributions",

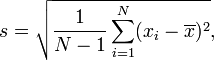

"normality-assumption"

] |

3 | 1 | 5 | null | 71 | 6650 | What are some valuable Statistical Analysis open source projects available right now?

Edit: as pointed out by Sharpie, valuable could mean helping you get things done faster or more cheaply.

| What are some valuable Statistical Analysis open source projects? | CC BY-SA 2.5 | null | 2010-07-19T19:13:28.577 | 2022-11-27T23:33:13.540 | 2011-02-12T05:50:03.667 | 183 | 18 | [

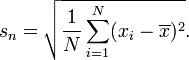

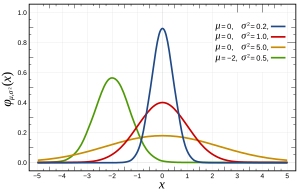

"software",

"open-source"

] |

4 | 1 | 135 | null | 23 | 45871 | I have two groups of data. Each with a different distribution of multiple variables. I'm trying to determine if these two groups' distributions are different in a statistically significant way. I have the data in both raw form and binned up in easier to deal with discrete categories with frequency counts in each.

What tests/procedures/methods should I use to determine whether or not these two groups are significantly different and how do I do that in SAS or R (or Orange)?

| Assessing the significance of differences in distributions | CC BY-SA 2.5 | null | 2010-07-19T19:13:31.617 | 2010-09-08T03:00:19.690 | null | null | 23 | [

"distributions",

"statistical-significance"

] |

5 | 2 | null | 3 | 90 | null | The R-project

[http://www.r-project.org/](http://www.r-project.org/)

R is valuable and significant because it was the first widely-accepted Open-Source alternative to big-box packages. It's mature, well supported, and a standard within many scientific communities.

- Some reasons why it is useful and valuable

- There are some nice tutorials here.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:14:43.050 | 2010-07-19T19:21:15.063 | 2010-07-19T19:21:15.063 | 23 | 23 | null |

6 | 1 | null | null | 486 | 173164 | Last year, I read a blog post from [Brendan O'Connor](http://anyall.org/) entitled ["Statistics vs. Machine Learning, fight!"](http://anyall.org/blog/2008/12/statistics-vs-machine-learning-fight/) that discussed some of the differences between the two fields. [Andrew Gelman responded favorably to this](http://andrewgelman.com/2008/12/machine_learnin/):

Simon Blomberg:

>

From R's fortunes

package: To paraphrase provocatively,

'machine learning is statistics minus

any checking of models and

assumptions'.

-- Brian D. Ripley (about the difference between machine learning

and statistics) useR! 2004, Vienna

(May 2004) :-) Season's Greetings!

Andrew Gelman:

>

In that case, maybe we should get rid

of checking of models and assumptions

more often. Then maybe we'd be able to

solve some of the problems that the

machine learning people can solve but

we can't!

There was also the ["Statistical Modeling: The Two Cultures" paper](http://projecteuclid.org/euclid.ss/1009213726) by Leo Breiman in 2001 which argued that statisticians rely too heavily on data modeling, and that machine learning techniques are making progress by instead relying on the predictive accuracy of models.

Has the statistics field changed over the last decade in response to these critiques? Do the two cultures still exist or has statistics grown to embrace machine learning techniques such as neural networks and support vector machines?

| The Two Cultures: statistics vs. machine learning? | CC BY-SA 3.0 | null | 2010-07-19T19:14:44.080 | 2021-01-19T17:59:15.653 | 2017-04-08T17:58:18.247 | 11887 | 5 | [

"machine-learning",

"pac-learning"

] |

7 | 1 | 18 | null | 103 | 42538 | I've been working on a new method for analyzing and parsing datasets to identify and isolate subgroups of a population without foreknowledge of any subgroup's characteristics. While the method works well enough with artificial data samples (i.e. datasets created specifically for the purpose of identifying and segregating subsets of the population), I'd like to try testing it with live data.

What I'm looking for is a freely available (i.e. non-confidential, non-proprietary) data source. Preferably one containing bimodal or multimodal distributions or being obviously comprised of multiple subsets that cannot be easily pulled apart via traditional means. Where would I go to find such information?

| Locating freely available data samples | CC BY-SA 2.5 | null | 2010-07-19T19:15:59.303 | 2022-11-30T05:37:29.877 | 2013-09-26T21:50:36.963 | 253 | 38 | [

"dataset",

"sample",

"population",

"teaching"

] |

9 | 2 | null | 3 | 15 | null | [Incanter](http://incanter.org/) is a Clojure-based, R-like platform (environment + libraries) for statistical computing and graphics.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:16:27.553 | 2010-07-19T19:16:27.553 | null | null | 50 | null |

10 | 1 | 1887 | null | 63 | 119038 | Many studies in the social sciences use Likert scales. When is it appropriate to use Likert data as ordinal and when is it appropriate to use it as interval data?

| Under what conditions should Likert scales be used as ordinal or interval data? | CC BY-SA 2.5 | null | 2010-07-19T19:17:47.537 | 2021-02-04T18:28:52.953 | 2017-01-26T11:52:53.043 | 28666 | 24 | [

"ordinal-data",

"likert",

"scales",

"measurement"

] |

11 | 1 | 1201 | null | 5 | 355 | Is there a good, modern treatment covering the various methods of multivariate interpolation, including which methodologies are typically best for particular types of problems? I'm interested in a solid statistical treatment including error estimates under various model assumptions.

An example:

[Shepard's method](http://en.wikipedia.org/wiki/Inverse_distance_weighting)

Say we're sampling from a multivariate normal distribution with unknown parameters. What can we say about the standard error of the interpolated estimates?

I was hoping for a pointer to a general survey addressing similar questions for the various types of multivariate interpolations in common use.

| Multivariate Interpolation Approaches | CC BY-SA 2.5 | null | 2010-07-19T19:18:30.810 | 2010-08-03T21:50:09.007 | 2010-07-28T07:58:52.320 | 34 | 34 | [

"multivariable",

"interpolation"

] |

12 | 2 | null | 7 | 24 | null | See my response to ["Datasets for Running Statistical Analysis on"](https://stackoverflow.com/questions/2252144/datasets-for-running-statistical-analysis-on/2252450#2252450) in reference to datasets in R.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:18:41.370 | 2010-07-19T19:18:41.370 | 2017-05-23T12:39:26.143 | -1 | 5 | null |

13 | 2 | null | 6 | 27 | null | Machine Learning seems to have its basis in the pragmatic - a Practical observation or simulation of reality. Even within statistics, mindless "checking of models and assumptions" can lead to discarding methods that are useful.

For example, years ago, the very first commercially available (and working) Bankruptcy model implemented by the credit bureaus was created through a plain old linear regression model targeting a 0-1 outcome. Technically, that's a bad approach, but practically, it worked.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:18:56.800 | 2010-07-19T19:18:56.800 | null | null | 23 | null |

14 | 2 | null | 3 | 6 | null | I second that Jay. Why is R valuable? Here's a short list of reasons. [http://www.inside-r.org/why-use-r](http://www.inside-r.org/why-use-r). Also check out [ggplot2](http://had.co.nz/ggplot2/) - a very nice graphics package for R. Some nice tutorials [here](http://gettinggeneticsdone.blogspot.com/search/label/ggplot2).

| null | CC BY-SA 2.5 | null | 2010-07-19T19:19:03.990 | 2010-07-19T19:19:03.990 | null | null | 36 | null |

15 | 2 | null | 1 | 23 | null | John Cook gives some interesting recommendations. Basically, get percentiles/quantiles (not means or obscure scale parameters!) from the experts, and fit them with the appropriate distribution.

[http://www.johndcook.com/blog/2010/01/31/parameters-from-percentiles/](http://www.johndcook.com/blog/2010/01/31/parameters-from-percentiles/)

| null | CC BY-SA 2.5 | null | 2010-07-19T19:19:46.160 | 2010-07-19T19:19:46.160 | null | null | 6 | null |

16 | 2 | null | 3 | 17 | null | Two projects spring to mind:

- Bugs - taking (some of) the pain out of Bayesian statistics. It allows the user to focus more on the model and a bit less on MCMC.

- Bioconductor - perhaps the most popular statistical tool in Bioinformatics. I know it's a R repository, but there are a large number of people who want to learn R, just for Bioconductor. The number of packages available for cutting edge analysis, make it second to none.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:22:31.190 | 2010-07-19T20:43:02.683 | 2010-07-19T20:43:02.683 | 8 | 8 | null |

17 | 1 | 29 | null | 12 | 1903 | I have four competing models which I use to predict a binary outcome variable (say, employment status after graduating, 1 = employed, 0 = not-employed) for n subjects. A natural metric of model performance is hit rate which is the percentage of correct predictions for each one of the models.

It seems to me that I cannot use ANOVA in this setting as the data violates the assumptions underlying ANOVA. Is there an equivalent procedure I could use instead of ANOVA in the above setting to test for the hypothesis that all four models are equally effective?

| How can I adapt ANOVA for binary data? | CC BY-SA 2.5 | null | 2010-07-19T19:24:12.187 | 2012-01-22T23:34:51.837 | 2012-01-22T23:34:51.837 | 7972 | null | [

"anova",

"chi-squared-test",

"generalized-linear-model"

] |

18 | 2 | null | 7 | 43 | null | Also see the UCI machine learning Data Repository.

[http://archive.ics.uci.edu/ml/](http://archive.ics.uci.edu/ml/)

| null | CC BY-SA 2.5 | null | 2010-07-19T19:24:18.580 | 2010-07-19T19:24:18.580 | null | null | 36 | null |

19 | 2 | null | 7 | 16 | null | [Gapminder](http://www.gapminder.org/data/) has a number (430 at the last look) of datasets, which may or may not be of use to you.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:24:21.327 | 2010-07-19T19:24:21.327 | null | null | 55 | null |

20 | 2 | null | 2 | 3 | null | The assumption of normality assumes your data is normally distributed (the bell curve, or gaussian distribution). You can check this by plotting the data or checking the measures for kurtosis (how sharp the peak is) and skewdness (?) (if more than half the data is on one side of the peak).

| null | CC BY-SA 2.5 | null | 2010-07-19T19:24:35.803 | 2010-07-19T19:24:35.803 | null | null | 37 | null |

21 | 1 | null | null | 6 | 304 | What are some of the ways to forecast demographic census with some validation and calibration techniques?

Some of the concerns:

- Census blocks vary in sizes as rural

areas are a lot larger than condensed

urban areas. Is there a need to account for the area size difference?

- if let's say I have census data

dating back to 4 - 5 census periods,

how far can i forecast it into the

future?

- if some of the census zone change

lightly in boundaries, how can i

account for that change?

- What are the methods to validate

census forecasts? for example, if i

have data for existing 5 census

periods, should I model the first 3

and test it on the latter two? or is

there another way?

- what's the state of practice in

forecasting census data, and what are

some of the state of the art methods?

| Forecasting demographic census | CC BY-SA 2.5 | null | 2010-07-19T19:24:36.303 | 2019-08-04T09:23:05.420 | 2019-08-04T09:23:05.420 | 11887 | 59 | [

"forecasting",

"population",

"demography",

"census"

] |

22 | 1 | null | null | 431 | 276770 | How would you describe in plain English the characteristics that distinguish Bayesian from Frequentist reasoning?

| Bayesian and frequentist reasoning in plain English | CC BY-SA 3.0 | null | 2010-07-19T19:25:39.467 | 2022-10-28T14:15:46.433 | 2011-10-04T07:05:14.067 | 930 | 66 | [

"bayesian",

"frequentist"

] |

23 | 1 | 91 | null | 27 | 105467 | How can I find the PDF (probability density function) of a distribution given the CDF (cumulative distribution function)?

| Finding the PDF given the CDF | CC BY-SA 2.5 | null | 2010-07-19T19:26:04.363 | 2019-11-25T03:17:45.387 | null | null | 69 | [

"distributions",

"density-function",

"cumulative-distribution-function"

] |

24 | 2 | null | 3 | 22 | null | For doing a variety of MCMC tasks in Python, there's [PyMC](https://www.pymc.io/welcome.html), which I've gotten quite a bit of use out of. I haven't run across anything that I can do in BUGS that I can't do in PyMC, and the way you specify models and bring in data seems to be a lot more intuitive to me.

| null | CC BY-SA 4.0 | null | 2010-07-19T19:26:13.693 | 2022-11-27T23:15:56.147 | 2022-11-27T23:15:56.147 | 362671 | 61 | null |

25 | 1 | 32 | null | 10 | 4041 | What modern tools (Windows-based) do you suggest for modeling financial time series?

| Tools for modeling financial time series | CC BY-SA 2.5 | null | 2010-07-19T19:27:13.503 | 2022-12-03T14:50:44.067 | 2010-07-26T23:38:29.430 | 69 | 69 | [

"modeling",

"time-series",

"finance",

"software"

] |

26 | 1 | 61 | null | 38 | 25091 | What is a standard deviation, how is it calculated and what is its use in statistics?

| What is a standard deviation? | CC BY-SA 3.0 | null | 2010-07-19T19:27:43.860 | 2017-11-12T21:26:05.097 | 2017-11-12T21:26:05.097 | 11887 | 75 | [

"standard-deviation"

] |

28 | 2 | null | 3 | 7 | null | [GSL](http://www.gnu.org/software/gsl/) for those of you who wish to program in C / C++ is a valuable resource as it provides several routines for random generators, linear algebra etc. While GSL is primarily available for Linux there are also ports for Windows (See: [this](https://web.archive.org/web/20110817071546/http://gladman.plushost.co.uk/oldsite/computing/gnu_scientific_library.php) and [this](http://david.geldreich.free.fr/dev.html)).

| null | CC BY-SA 4.0 | null | 2010-07-19T19:28:12.830 | 2022-11-27T23:14:52.687 | 2022-11-27T23:14:52.687 | 362671 | null | null |

29 | 2 | null | 17 | 8 | null | Contingency table (chi-square). Also Logistic Regression is your friend - use dummy variables.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:28:15.640 | 2010-07-19T19:28:15.640 | null | null | 36 | null |

30 | 1 | 55 | null | 16 | 1992 | Which methods are used for testing random variate generation algorithms?

| Testing random variate generation algorithms | CC BY-SA 2.5 | null | 2010-07-19T19:28:34.220 | 2022-12-05T08:26:28.510 | 2010-08-25T14:12:54.547 | 919 | 69 | [

"algorithms",

"hypothesis-testing",

"random-variable",

"random-generation"

] |

31 | 1 | null | null | 289 | 545327 | After taking a statistics course and then trying to help fellow students, I noticed one subject that inspires much head-desk banging is interpreting the results of statistical hypothesis tests. It seems that students easily learn how to perform the calculations required by a given test but get hung up on interpreting the results. Many computerized tools report test results in terms of "p values" or "t values".

How would you explain the following points to college students taking their first course in statistics:

- What does a "p-value" mean in relation to the hypothesis being tested? Are there cases when one should be looking for a high p-value or a low p-value?

- What is the relationship between a p-value and a t-value?

| What is the meaning of p values and t values in statistical tests? | CC BY-SA 3.0 | null | 2010-07-19T19:28:44.903 | 2023-01-27T12:12:00.777 | 2019-09-27T13:22:45.057 | 919 | 13 | [

"hypothesis-testing",

"p-value",

"interpretation",

"intuition",

"faq"

] |

32 | 2 | null | 25 | 16 | null | I recommend R (see [the time series view on CRAN](http://cran.r-project.org/web/views/TimeSeries.html)).

Some useful references:

- Econometrics in R, by Grant Farnsworth

- Multivariate time series modelling in R

| null | CC BY-SA 2.5 | null | 2010-07-19T19:29:06.527 | 2010-07-19T19:29:06.527 | 2017-05-23T12:39:26.167 | -1 | 5 | null |

33 | 1 | 49 | null | 5 | 2578 | What R packages should I install for seasonality analysis?

| R packages for seasonality analysis | CC BY-SA 2.5 | null | 2010-07-19T19:30:03.717 | 2022-11-24T14:17:48.967 | 2010-09-16T06:56:44.040 | null | 69 | [

"r",

"seasonality"

] |

35 | 1 | 72 | null | 18 | 5678 | I have a data set that I'd expect to follow a Poisson distribution, but it is overdispersed by about 3-fold. At the present, I'm modelling this overdispersion using something like the following code in R.

```

## assuming a median value of 1500

med = 1500

rawdist = rpois(1000000,med)

oDdist = rawDist + ((rawDist-med)*3)

```

Visually, this seems to fit my empirical data very well. If I'm happy with the fit, is there any reason that I should be doing something more complex, like using a [negative binomial distribution, as described here](http://en.wikipedia.org/wiki/Overdispersion#Poisson)? (If so, any pointers or links on doing so would be much appreciated).

Oh, and I'm aware that this creates a slightly jagged distribution (due to the multiplication by three), but that shouldn't matter for my application.

---

Update: For the sake of anyone else who searches and finds this question, here's a simple R function to model an overdispersed poisson using a negative binomial distribution. Set d to the desired mean/variance ratio:

```

rpois.od<-function (n, lambda,d=1) {

if (d==1)

rpois(n, lambda)

else

rnbinom(n, size=(lambda/(d-1)), mu=lambda)

}

```

(via the R mailing list: [https://stat.ethz.ch/pipermail/r-help/2002-June/022425.html](https://stat.ethz.ch/pipermail/r-help/2002-June/022425.html))

| Modelling a Poisson distribution with overdispersion | CC BY-SA 2.5 | null | 2010-07-19T19:30:30.390 | 2010-07-25T01:44:14.513 | 2010-07-25T01:44:14.513 | 54 | 54 | [

"distributions",

"modeling",

"poisson-distribution",

"overdispersion"

] |

36 | 1 | null | null | 83 | 389933 | There is an old saying: "Correlation does not mean causation". When I teach, I tend to use the following standard examples to illustrate this point:

- number of storks and birth rate in Denmark;

- number of priests in America and alcoholism;

- in the start of the 20th century it was noted that there was a strong correlation between 'Number of radios' and 'Number of people in Insane Asylums'

- and my favorite: pirates cause global warming.

However, I do not have any references for these examples and whilst amusing, they are obviously false.

Does anyone have any other good examples?

| Examples for teaching: Correlation does not mean causation | CC BY-SA 3.0 | null | 2010-07-19T19:31:47.363 | 2019-08-23T10:09:53.320 | 2014-05-23T06:31:12.967 | 43889 | 8 | [

"correlation",

"teaching"

] |

38 | 2 | null | 35 | 4 | null | If your mean value for the Poisson is 1500, then you're very close to a normal distribution; you might try using that as an approximation and then modelling the mean and variance separately.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:32:28.063 | 2010-07-19T19:32:28.063 | null | null | 61 | null |

39 | 1 | 721 | null | 7 | 246 | I'm looking for worked out solutions using Bayesian and/or logit analysis similar to a workbook or an annal.

The worked out problems could be of any field; however, I'm interested in urban planning / transportation related fields.

| Sample problems on logit modeling and Bayesian methods | CC BY-SA 2.5 | null | 2010-07-19T19:32:29.513 | 2010-07-27T05:40:44.950 | 2010-07-27T04:57:47.780 | 190 | 59 | [

"modeling",

"bayesian",

"logit",

"transportation"

] |

40 | 1 | 111 | null | 14 | 1003 | What algorithms are used in modern and good-quality random number generators?

| Pseudo-random number generation algorithms | CC BY-SA 2.5 | null | 2010-07-19T19:32:47.750 | 2014-01-28T07:46:08.953 | 2010-08-25T14:13:48.740 | 919 | 69 | [

"algorithms",

"random-variable",

"random-generation"

] |

41 | 2 | null | 26 | 12 | null | A quote from [Wikipedia](http://en.wikipedia.org/wiki/Standard_deviation).

>

It shows how much variation there is from the "average" (mean, or expected/budgeted value). A low standard deviation indicates that the data points tend to be very close to the mean, whereas high standard deviation indicates that the data is spread out over a large range of values.

| null | CC BY-SA 3.0 | null | 2010-07-19T19:33:13.037 | 2015-06-17T08:59:36.287 | 2015-06-17T08:59:36.287 | -1 | 83 | null |

42 | 2 | null | 3 | 15 | null | [Weka](http://www.cs.waikato.ac.nz/ml/weka) for data mining - contains many classification and clustering algorithms in Java.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:33:19.073 | 2010-07-19T19:33:19.073 | null | null | 80 | null |

43 | 2 | null | 25 | 7 | null | R is great, but I wouldn't really call it "windows based" :) That's like saying the cmd prompt is windows based. I guess it is technically in a window...

RapidMiner is far easier to use [1]. It's a free, open-source, multi-platform, GUI. Here's a video on time series forecasting:

[Financial Time Series Modelling - Part 1](https://web.archive.org/web/20130522020008/http://rapidminerresources.com/index.php?page=financial-time-series-modelling---part-1)

Also, don't forget to read:

[Forecasting Methods and Principles](https://www.researchgate.net/project/Forecasting-Methods-and-Principles-forecastingprinciplescom-forprincom)

[1] No, I don't work for them.

| null | CC BY-SA 4.0 | null | 2010-07-19T19:33:37.497 | 2022-12-03T14:50:44.067 | 2022-12-03T14:50:44.067 | 362671 | 74 | null |

44 | 1 | 89 | null | 9 | 778 | How would you explain data visualization and why it is important to a layman?

| Explain data visualization | CC BY-SA 2.5 | null | 2010-07-19T19:34:42.643 | 2013-07-27T00:28:49.377 | 2013-07-27T00:28:49.377 | 12786 | 68 | [

"data-visualization",

"intuition"

] |

45 | 2 | null | 40 | 6 | null | The [Mersenne Twister](http://en.wikipedia.org/wiki/Mersenne_twister) is one I've come across and used before now.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:34:44.033 | 2010-07-19T19:34:44.033 | null | null | 55 | null |

46 | 2 | null | 26 | 1 | null | A standard deviation is the square root of the second central moment of a distribution. A central moment is the expected difference from the expected value of the distribution. A first central moment would usually be 0, so we define a second central moment as the expected value of the squared distance of a random variable from its expected value.

To put it on a scale that is more in line with the original observations, we take the square root of that second central moment and call it the standard deviation.

Standard deviation is a property of a population. It measures how much average "dispersion" there is to that population. Are all the obsrvations clustered around the mean, or are they widely spread out?

To estimate the standard deviation of a population, we often calculate the standard deviation of a "sample" from that population. To do this, you take observations from that population, calculate a mean of those observations, and then calculate the square root of the average squared deviation from that "sample mean".

To get an unbiased estimator of the variance, you don't actually calculate the average squared deviation from the sample mean, but instead, you divide by (N-1) where N is the number of observations in your sample. Note that this "sample standard deviation" is not an unbiased estimator of the standard deviation, but the square of the "sample standard deviation" is an unbiased estimator of the variance of the population.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:35:04.827 | 2010-07-20T02:13:12.733 | 2010-07-20T02:13:12.733 | 62 | 62 | null |

47 | 1 | 268 | null | 9 | 1102 | I have a dataset of 130k internet users characterized by 4 variables describing users' number of sessions, locations visited, avg data download and session time aggregated from four months of activity.

Dataset is very heavy-tailed. For example third of users logged only once during four months, whereas six users had more than 1000 sessions.

I wanted to come up with a simple classification of users, preferably with indication of the most appropriate number of clusters.

Is there anything you could recommend as a solution?

| Clustering of large, heavy-tailed dataset | CC BY-SA 4.0 | null | 2010-07-19T19:36:12.140 | 2020-05-13T03:35:50.177 | 2020-05-13T03:35:50.177 | 11887 | 22 | [

"clustering",

"large-data",

"kurtosis"

] |

49 | 2 | null | 33 | 7 | null | You don't need to install any packages because this is possible with base-R functions. Have a look at [the arima function](http://www.stat.ucl.ac.be/ISdidactique/Rhelp/library/ts/html/arima.html).

This is a basic function of [Box-Jenkins analysis](http://en.wikipedia.org/wiki/Box%E2%80%93Jenkins), so you should consider reading one of the R time series text-books for an overview; my favorite is Shumway and Stoffer. "[Time Series Analysis and Its Applications: With R Examples](http://rads.stackoverflow.com/amzn/click/0387293175)".

| null | CC BY-SA 2.5 | null | 2010-07-19T19:36:52.403 | 2010-07-19T19:36:52.403 | null | null | 5 | null |

50 | 1 | 85 | null | 103 | 24363 | What do they mean when they say "random variable"?

| What is meant by a "random variable"? | CC BY-SA 2.5 | null | 2010-07-19T19:37:31.873 | 2023-01-02T16:45:45.633 | 2017-04-15T16:49:34.860 | 11887 | 62 | [

"mathematical-statistics",

"random-variable",

"intuition",

"definition"

] |

51 | 1 | 64 | null | 5 | 232 | Are there any objective methods of assessment or standardized tests available to measure the effectiveness of a software that does pattern recognition?

| Measuring the effectiveness of a pattern recognition software | CC BY-SA 2.5 | null | 2010-07-19T19:37:55.630 | 2010-07-19T20:12:16.453 | null | null | 68 | [

"pattern-recognition"

] |

53 | 1 | 78 | null | 207 | 193152 | What are the main differences between performing principal component analysis (PCA) on the correlation matrix and on the covariance matrix? Do they give the same results?

| PCA on correlation or covariance? | CC BY-SA 3.0 | null | 2010-07-19T19:39:08.483 | 2022-04-09T04:48:09.847 | 2018-07-16T19:41:56.540 | 3277 | 17 | [

"correlation",

"pca",

"covariance",

"factor-analysis"

] |

54 | 1 | 65 | null | 18 | 1072 | As I understand UK Schools teach that the Standard Deviation is found using:

whereas US Schools teach:

(at a basic level anyway).

This has caused a number of my students problems in the past as they have searched on the Internet, but found the wrong explanation.

Why the difference?

With simple datasets say 10 values, what degree of error will there be if the wrong method is applied (eg in an exam)?

| Why do US and UK Schools Teach Different methods of Calculating the Standard Deviation? | CC BY-SA 2.5 | null | 2010-07-19T19:41:19.367 | 2020-01-13T00:55:13.780 | 2017-03-09T17:30:35.957 | -1 | 55 | [

"standard-deviation",

"error",

"teaching",

"unbiased-estimator"

] |

55 | 2 | null | 30 | 12 | null | The [Diehard Test Suite](http://en.wikipedia.org/wiki/Diehard_tests) is something close to a Golden Standard for testing random number generators. It includes a number of tests where a good random number generator should produce result distributed according to some know distribution against which the outcome using the tested generator can then be compared.

EDIT

I have to update this since I was not exactly right:

Diehard might still be used a lot, but it is no longer maintained and not state-of-the-art anymore. NIST has come up with [a set of improved tests](http://csrc.nist.gov/groups/ST/toolkit/rng/index.html) since.

| null | CC BY-SA 3.0 | null | 2010-07-19T19:41:39.880 | 2011-05-12T18:38:27.547 | 2011-05-12T18:38:27.547 | 56 | 56 | null |

56 | 2 | null | 22 | 261 | null | Here is how I would explain the basic difference to my grandma:

I have misplaced my phone somewhere in the home. I can use the phone locator on the base of the instrument to locate the phone and when I press the phone locator the phone starts beeping.

Problem: Which area of my home should I search?

## Frequentist Reasoning

I can hear the phone beeping. I also have a mental model which helps me identify the area from which the sound is coming. Therefore, upon hearing the beep, I infer the area of my home I must search to locate the phone.

## Bayesian Reasoning

I can hear the phone beeping. Now, apart from a mental model which helps me identify the area from which the sound is coming from, I also know the locations where I have misplaced the phone in the past. So, I combine my inferences using the beeps and my prior information about the locations I have misplaced the phone in the past to identify an area I must search to locate the phone.

| null | CC BY-SA 3.0 | null | 2010-07-19T19:42:28.040 | 2016-01-16T19:14:26.703 | 2016-01-16T19:14:26.703 | 100906 | null | null |

57 | 2 | null | 50 | 5 | null | From [Wikipedia](http://en.wikipedia.org/wiki/Random_variable):

>

In mathematics (especially probability

theory and statistics), a random

variable (or stochastic variable) is

(in general) a measurable function

that maps a probability space into a

measurable space. Random variables

mapping all possible outcomes of an

event into the real numbers are

frequently studied in elementary

statistics and used in the sciences to

make predictions based on data

obtained from scientific experiments.

In addition to scientific

applications, random variables were

developed for the analysis of games of

chance and stochastic events. The

utility of random variables comes from

their ability to capture only the

mathematical properties necessary to

answer probabilistic questions.

From [cnx.org](http://cnx.org/content/m13418/latest/):

>

A random variable is a function, which assigns unique numerical values to all possible

outcomes of a random experiment under fixed conditions. A random variable is not a

variable but rather a function that maps events to numbers.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:42:34.670 | 2010-07-19T19:47:54.623 | 2010-07-19T19:47:54.623 | 69 | 69 | null |

58 | 1 | 6988 | null | 14 | 2857 | What is the back-propagation algorithm and how does it work?

| Can someone please explain the back-propagation algorithm? | CC BY-SA 2.5 | null | 2010-07-19T19:42:57.990 | 2013-04-10T12:09:31.350 | 2011-04-29T00:38:41.203 | 3911 | 68 | [

"algorithms",

"optimization",

"neural-networks"

] |

59 | 2 | null | 2 | 29 | null | The assumption of normality is just the supposition that the underlying [random variable](http://en.wikipedia.org/wiki/Random_variable) of interest is distributed [normally](http://en.wikipedia.org/wiki/Normal_distribution), or approximately so. Intuitively, normality may be understood as the result of the sum of a large number of independent random events.

More specifically, normal distributions are defined by the following function:

$$ f(x) =\frac{1}{\sqrt{2\pi\sigma^2}}e^{ -\frac{(x-\mu)^2}{2\sigma^2} },$$

where $\mu$ and $\sigma^2$ are the mean and the variance, respectively, and which appears as follows:

This can be checked in [multiple ways](http://en.wikipedia.org/wiki/Normality_test), that may be more or less suited to your problem by its features, such as the size of n. Basically, they all test for features expected if the distribution were normal (e.g. expected [quantile distribution](http://en.wikipedia.org/wiki/Q-Q_plot)).

| null | CC BY-SA 4.0 | null | 2010-07-19T19:43:20.423 | 2022-11-23T12:58:30.760 | 2022-11-23T12:58:30.760 | 362671 | 39 | null |

60 | 2 | null | 47 | 1 | null | [K-Means clustering](http://en.wikipedia.org/wiki/K-means_algorithm) should work well for this type of problem. However, it does require that you specify the number of clusters in advance.

Given the nature of this data, however, you may be able to work with a [hierarchical clustering algorithm](http://en.wikipedia.org/wiki/Cluster_analysis#Hierarchical_clustering) instead. Since all 4 variables are most likely fairly highly correlated, you can most likely break out clusters, and stop when you reach a small enough distance between clusters. This may be a much simpler approach in this specific case, and allows you to determine "how many clusters" by just stopping as soon as you've broken your set into fine enough clusters.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:43:20.720 | 2010-07-19T19:43:20.720 | null | null | 41 | null |

61 | 2 | null | 26 | 38 | null | Standard deviation is a number that represents the "spread" or "dispersion" of a set of data. There are other measures for spread, such as range and variance.

Here are some example sets of data, and their standard deviations:

```

[1,1,1] standard deviation = 0 (there's no spread)

[-1,1,3] standard deviation = 1.6 (some spread)

[-99,1,101] standard deviation = 82 (big spead)

```

The above data sets have the same mean.

Deviation means "distance from the mean".

"Standard" here means "standardized", meaning the standard deviation and mean are in the same units, unlike variance.

For example, if the mean height is 2 meters, the standard deviation might be 0.3 meters, whereas the variance would be 0.09 meters squared.

It is convenient to know that [at least 75%](http://en.wikipedia.org/wiki/Chebyshev%27s_inequality) of the data points always lie within 2 standard deviations of the mean (or [around 95%](http://en.wikipedia.org/wiki/68-95-99.7_rule) if the distribution is Normal).

For example, if the mean is 100, and the standard deviation is 15, then at least 75% of the values are between 70 and 130.

If the distribution happens to be Normal, then 95% of the values are between 70 and 130.

Generally speaking, IQ test scores are normally distributed and have an average of 100. Someone who is "very bright" is two standard deviations above the mean, meaning an IQ test score of 130.

| null | CC BY-SA 3.0 | null | 2010-07-19T19:44:35.037 | 2013-01-23T09:37:19.680 | 2013-01-23T09:37:19.680 | 74 | 74 | null |

62 | 1 | 80 | null | 8 | 267 | With the recent FIFA world cup, I decided to have some fun and determine which months produced world cup football players. Turned out, most footballers in the 2010 world cup were born in the first half of the year.

Someone pointed out, that children born in the first half of the year had a physical advantage over others and hence "survivorship bias" was involved in the equation. Is this an accurate observation? Can someone please explain why he says that?

Also, when trying to understand the concept, I found most examples revolved around the financial sector. Are they any other everyday life examples explaining it?

| A case of survivorship bias? | CC BY-SA 4.0 | null | 2010-07-19T19:44:58.033 | 2022-02-11T14:03:16.890 | 2022-02-11T14:03:16.890 | 11887 | 58 | [

"bias"

] |

63 | 2 | null | 36 | 43 | null | It might be useful to explain that "causes" is an asymmetric relation (X causes Y is different from Y causes X), whereas "is correlated with" is a symmetric relation.

For instance, homeless population and crime rate might be correlated, in that both tend to be high or low in the same locations. It is equally valid to say that homelesss population is correlated with crime rate, or crime rate is correlated with homeless population. To say that crime causes homelessness, or homeless populations cause crime are different statements. And correlation does not imply that either is true. For instance, the underlying cause could be a 3rd variable such as drug abuse, or unemployment.

The mathematics of statistics is not good at identifying underlying causes, which requires some other form of judgement.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:45:19.780 | 2010-07-19T19:45:19.780 | null | null | 87 | null |

64 | 2 | null | 51 | 8 | null | Yes, there are many methods. You would need to specify which model you're using, because it can vary.

For instance, Some models will be compared based on the [AIC](http://en.wikipedia.org/wiki/Akaike_information_criterion) or [BIC](http://en.wikipedia.org/wiki/Bayesian_information_criterion) criteria. In other cases, one would look at the [MSE from cross validation](http://en.wikipedia.org/wiki/Cross-validation_%28statistics%29) (as, for instance, with a support vector machine).

- I recommend reading Pattern Recognition and Machine Learning by Christopher Bishop.

- This is also discussed in Chapter 5 on Credibility, and particularly section 5.5 "Comparing data mining methods" of Data Mining: Practical Machine Learning Tools and Techniques by Witten and Frank (which discusses Weka in detail).

- Lastly, you should also have a look at The Elements of Statistical Learning by Hastie, Tibshirani and Friedman which is available for free online.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:46:08.217 | 2010-07-19T20:12:16.453 | 2010-07-19T20:12:16.453 | 5 | 5 | null |

65 | 2 | null | 54 | 20 | null | The first formula is the population standard deviation and the second formula is the the sample standard deviation. The second formula is also related to the unbiased estimator of the variance - see [wikipedia](http://en.wikipedia.org/wiki/Variance#Population_variance_and_sample_variance) for further details.

I suppose (here) in the UK they don't make the distinction between sample and population at high school. They certainly don't touch concepts such as biased estimators.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:46:11.383 | 2010-07-19T20:44:53.427 | 2010-07-19T20:44:53.427 | 8 | 8 | null |

66 | 2 | null | 54 | 6 | null | This is [Bessel's Correction](http://en.wikipedia.org/wiki/Bessel%27s_correction). The US version is showing the formula for the sample standard deviation, where the UK version above is the standard deviation of the sample.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:46:11.993 | 2010-07-19T19:46:11.993 | null | null | 41 | null |

67 | 2 | null | 44 | 2 | null | From [Wikipedia](http://en.wikipedia.org/wiki/Data_visualization): Data visualization is the study of the visual representation of data, meaning "information which has been abstracted in some schematic form, including attributes or variables for the units of information"

Data viz is important for visualizing trends in data, telling a story - See [Minard's map of Napoleon's march](http://www.edwardtufte.com/tufte/posters) - possibly one of the best data graphics ever printed.

Also see any of Edward Tufte's books - especially [Visual Display of Quantitative Information.](http://rads.stackoverflow.com/amzn/click/0961392142)

| null | CC BY-SA 2.5 | null | 2010-07-19T19:47:16.577 | 2010-07-19T19:47:16.577 | null | null | 36 | null |

69 | 2 | null | 54 | 3 | null | Since N is the number of points in the data set, one could argue that by calculating the mean one has reduced the degree of freedom in the data set by one (since one introduced a dependency into the data set), so one should use N-1 when estimating the standard deviation from a data set for which one had to estimate the mean before.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:47:49.587 | 2010-07-19T19:47:49.587 | null | null | 56 | null |

70 | 2 | null | 7 | 19 | null | [World Bank](http://data.worldbank.org/data-catalog) offers quite a lot of interesting data and has been recently very active in developing nice [API](http://data.worldbank.org/developers/api-overview) for it.

Also, [commugrate](http://www.cs.purdue.edu/commugrate/data_access/all_data_sets.php) project has an interesting list available.

For US health related data head for [Health Indicators Warehouse](http://www.healthindicators.gov/).

Daniel Lemire's blog [points](http://lemire.me/blog/archives/2012/03/27/publicly-available-large-data-sets-for-database-research/) to few interesting examples (mostly tailored towards DB research) including Canadian Census 1880 and synoptic cloud reports.

And as for today (03/04/2012) [US 1940 census records](http://1940census.archives.gov/) are also available to download.

| null | CC BY-SA 3.0 | null | 2010-07-19T19:48:45.853 | 2012-04-03T09:51:07.860 | 2012-04-03T09:51:07.860 | 22 | 22 | null |

71 | 2 | null | 58 | 3 | null | It's an algorithm for training feedforward multilayer neural networks (multilayer perceptrons). There are several nice java applets around the web that illustrate what's happening, like this one: [http://neuron.eng.wayne.edu/bpFunctionApprox/bpFunctionApprox.html](http://neuron.eng.wayne.edu/bpFunctionApprox/bpFunctionApprox.html). Also, [Bishop's book on NNs](http://rads.stackoverflow.com/amzn/click/0198538642) is the standard desk reference for anything to do with NNs.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:50:33.480 | 2010-07-19T19:50:33.480 | null | null | 36 | null |

72 | 2 | null | 35 | 11 | null | for overdispersed poisson, use the negative binomial, which allows you to parameterize the variance as a function of the mean precisely. rnbinom(), etc. in R.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:51:05.117 | 2010-07-19T19:51:05.117 | null | null | 96 | null |

73 | 1 | 104362 | null | 28 | 11307 | Duplicate thread: [I just installed the latest version of R. What packages should I obtain?](https://stats.stackexchange.com/questions/1676/i-just-installed-the-latest-version-of-r-what-packages-should-i-obtain)

What are the [R](http://www.r-project.org/) packages you couldn't imagine your daily work with data?

Please list both general and specific tools.

UPDATE:

As for 24.10.10 `ggplot2` seems to be the winer with 7 votes.

Other packages mentioned more than one are:

- plyr - 4

- RODBC, RMySQL - 4

- sqldf - 3

- lattice - 2

- zoo - 2

- Hmisc/rms - 2

- Rcurl - 2

- XML - 2

Thanks all for your answers!

| What R packages do you find most useful in your daily work? | CC BY-SA 3.0 | null | 2010-07-19T19:51:32.587 | 2015-08-20T09:00:50.943 | 2017-04-13T12:44:54.643 | -1 | 22 | [

"r"

] |

74 | 2 | null | 6 | 78 | null | In such a discussion, I always recall the famous Ken Thompson quote

>

When in doubt, use brute force.

In this case, machine learning is a salvation when the assumptions are hard to catch; or at least it is much better than guessing them wrong.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:51:34.287 | 2010-07-19T19:51:34.287 | null | null | null | null |

75 | 1 | 94 | null | 5 | 1803 | I'm using [R](http://www.r-project.org/) and the manuals on the R site are really informative. However, I'd like to see some more examples and implementations with R which can help me develop my knowledge faster. Any suggestions?

| Where can I find useful R tutorials with various implementations? | CC BY-SA 2.5 | null | 2010-07-19T19:52:31.180 | 2018-09-26T16:45:54.767 | 2018-09-26T16:45:54.767 | 7290 | 69 | [

"r",

"references"

] |

76 | 2 | null | 73 | 23 | null | I use [plyr](http://cran.r-project.org/web/packages/plyr/index.html) and [ggplot2](http://cran.r-project.org/web/packages/ggplot2/index.html) the most on a daily basis.

I also rely heavily on time series packages; most especially, the [zoo](http://cran.r-project.org/web/packages/zoo/index.html) package.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:52:49.387 | 2010-07-19T19:52:49.387 | null | null | 5 | null |

77 | 2 | null | 36 | 27 | null |

- Sometimes correlation is enough. For example, in car insurance, male drivers are correlated with more accidents, so insurance companies charge them more. There is no way you could actually test this for causation. You cannot change the genders of the drivers experimentally. Google has made hundreds of billions of dollars not caring about causation.

- To find causation, you generally need experimental data, not observational data. Though, in economics, they often use observed "shocks" to the system to test for causation, like if a CEO dies suddenly and the stock price goes up, you can assume causation.

- Correlation is a necessary but not sufficient condition for causation. To show causation requires a counter-factual.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:54:03.253 | 2010-09-09T18:16:59.853 | 2010-09-09T18:16:59.853 | 74 | 74 | null |

78 | 2 | null | 53 | 169 | null | You tend to use the covariance matrix when the variable scales are similar and the correlation matrix when variables are on different scales.

Using the correlation matrix is equivalent to standardizing each of the variables (to mean 0 and standard deviation 1). In general, PCA with and without standardizing will give different results. Especially when the scales are different.

As an example, take a look at this R `heptathlon` data set. Some of the variables have an average value of about 1.8 (the high jump), whereas other variables (run 800m) are around 120.

```

library(HSAUR)

heptathlon[,-8] # look at heptathlon data (excluding 'score' variable)

```

This outputs:

```

hurdles highjump shot run200m longjump javelin run800m

Joyner-Kersee (USA) 12.69 1.86 15.80 22.56 7.27 45.66 128.51

John (GDR) 12.85 1.80 16.23 23.65 6.71 42.56 126.12

Behmer (GDR) 13.20 1.83 14.20 23.10 6.68 44.54 124.20

Sablovskaite (URS) 13.61 1.80 15.23 23.92 6.25 42.78 132.24

Choubenkova (URS) 13.51 1.74 14.76 23.93 6.32 47.46 127.90

...

```

Now let's do PCA on covariance and on correlation:

```

# scale=T bases the PCA on the correlation matrix

hep.PC.cor = prcomp(heptathlon[,-8], scale=TRUE)

hep.PC.cov = prcomp(heptathlon[,-8], scale=FALSE)

biplot(hep.PC.cov)

biplot(hep.PC.cor)

```

[](https://i.stack.imgur.com/4IwjG.png)

Notice that PCA on covariance is dominated by `run800m` and `javelin`: PC1 is almost equal to `run800m` (and explains $82\%$ of the variance) and PC2 is almost equal to `javelin` (together they explain $97\%$). PCA on correlation is much more informative and reveals some structure in the data and relationships between variables (but note that the explained variances drop to $64\%$ and $71\%$).

Notice also that the outlying individuals (in this data set) are outliers regardless of whether the covariance or correlation matrix is used.

| null | CC BY-SA 4.0 | null | 2010-07-19T19:54:38.710 | 2018-10-10T11:27:18.523 | 2018-10-10T11:27:18.523 | 28666 | 8 | null |

79 | 2 | null | 54 | 5 | null | I am not sure this is purely a US vs. British issue. The rest of this page is excerpted from a faq I wrote.([http://www.graphpad.com/faq/viewfaq.cfm?faq=1383](http://www.graphpad.com/faq/viewfaq.cfm?faq=1383)).

How to compute the SD with n-1 in the denominator

- Compute the square of the difference between each value and the sample mean.

- Add those values up.

- Divide the sum by n-1. The result is called the variance.

- Take the square root to obtain the Standard Deviation.

Why n-1?

Why divide by n-1 rather than n when computing a standard deviation? In step 1, you compute the difference between each value and the mean of those values. You don't know the true mean of the population; all you know is the mean of your sample. Except for the rare cases where the sample mean happens to equal the population mean, the data will be closer to the sample mean than it will be to the true population mean. So the value you compute in step 2 will probably be a bit smaller (and can't be larger) than what it would be if you used the true population mean in step 1. To make up for this, divide by n-1 rather than n.v This is called Bessel's correction.

But why n-1? If you knew the sample mean, and all but one of the values, you could calculate what that last value must be. Statisticians say there are n-1 degrees of freedom.

When should the SD be computed with a denominator of n instead of n-1?

Statistics books often show two equations to compute the SD, one using n, and the other using n-1, in the denominator. Some calculators have two buttons.

The n-1 equation is used in the common situation where you are analyzing a sample of data and wish to make more general conclusions. The SD computed this way (with n-1 in the denominator) is your best guess for the value of the SD in the overall population.

If you simply want to quantify the variation in a particular set of data, and don't plan to extrapolate to make wider conclusions, then you can compute the SD using n in the denominator. The resulting SD is the SD of those particular values. It makes no sense to compute the SD this way if you want to estimate the SD of the population from which those points were drawn. It only makes sense to use n in the denominator when there is no sampling from a population, there is no desire to make general conclusions.

The goal of science is almost always to generalize, so the equation with n in the denominator should not be used. The only example I can think of where it might make sense is in quantifying the variation among exam scores. But much better would be to show a scatterplot of every score, or a frequency distribution histogram.

| null | CC BY-SA 4.0 | null | 2010-07-19T19:56:04.120 | 2020-01-13T00:55:13.780 | 2020-01-13T00:55:13.780 | 25 | 25 | null |

80 | 2 | null | 62 | 11 | null | The basic idea behind this is that football clubs have an age cut-off when determining teams. In the league my children participate in the age restrictions states that children born after July 31st are placed on the younger team. This means that two children that are effectively the same age can be playing with two different age groups. The child born July 31st will be playing on the older team and theoretically be the youngest and smallest on the team and in the league. The child born on August 1st will be the oldest and largest child in the league and will be able to benefit from that.

The survivorship bias comes because competitive leagues will select the best players for their teams. The best players in childhood are often the older players since they have additional time for their bodies to mature. This means that otherwise acceptable younger players are not selected simply because of their age. Since they are not given the same opportunities as the older kids, they don’t develop the same skills and eventually drop out of competitive soccer.

If the cut-off for competitive soccer in enough countries is January 1st, that would support the phenomena you see. A similar phenomena has been observed in several other sports including baseball and ice hockey.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:56:43.807 | 2010-07-19T19:56:43.807 | null | null | 93 | null |

81 | 2 | null | 73 | 8 | null | I use the [xtable](http://cran.r-project.org/web/packages/xtable/index.html) package. The xtable package turns tables produced by R (in particular, the tables displaying the anova results) into LaTeX tables, to be included in an article.

| null | CC BY-SA 2.5 | null | 2010-07-19T19:58:56.157 | 2010-07-19T19:58:56.157 | null | null | 69 | null |

83 | 2 | null | 75 | 2 | null | R is designed around ideas such as "reproducible research" and "trustworthy software", as John Chambers says [in his excellent book "Software for Data Analysis: Programming with R"](http://books.google.com/books?id=UXneuOIvhEAC&printsec=frontcover).

One of the best ways to learn R is to look at the wealth of source code that available on [CRAN](http://cran.r-project.org/) (with 2461 packages and counting). Simple `install.packages`, load a `library()`, and start browsing the code.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:02:51.297 | 2010-07-19T20:02:51.297 | null | null | 5 | null |

84 | 2 | null | 44 | 6 | null | I would explain it to a layman as:

>

Data visualization is taking data, and making a picture out of it. This allows you to easily see and understand relationships within the data much more easily than just looking at the numbers.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:03:34.307 | 2010-07-19T20:03:34.307 | null | null | 41 | null |

85 | 2 | null | 50 | 48 | null | A random variable is a variable whose value depends on unknown events. We can summarize the unknown events as "state", and then the random variable is a function of the state.

Example:

Suppose we have three dice rolls ($D_{1}$,$D_{2}$,$D_{3}$). Then the state $S=(D_{1},D_{2},D_{3})$.

- One random variable $X$ is the number of 5s. This is:

$$ X=(D_{1}=5?)+(D_{2}=5?)+(D_{3}=5?)$$

- Another random variable $Y$ is the sum of the dice rolls. This is:

$$ Y=D_{1}+D_{2}+D_{3} $$

| null | CC BY-SA 3.0 | null | 2010-07-19T20:08:00.927 | 2016-09-19T08:54:59.927 | 2016-09-19T08:54:59.927 | 35989 | 87 | null |

86 | 2 | null | 50 | 17 | null | Unlike a regular variable, a random variable may not be substituted for a single, unchanging value. Rather statistical properties such as the distribution of the random variable may be stated. The distribution is a function that provides the probability the variable will take on a given value, or fall within a range given certain parameters such as the mean or standard deviation.

Random variables may be classified as discrete if the distribution describes values from a countable set, such as the integers. The other classification for a random variable is continuous and is used if the distribution covers values from an uncountable set such as the real numbers.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:08:37.010 | 2010-07-20T17:46:55.840 | 2010-07-20T17:46:55.840 | 13 | 13 | null |

89 | 2 | null | 44 | 7 | null | When I teach very basic statistics to Secondary School Students I talk about evolution and how we have evolved to spot patterns in pictures rather than lists of numbers and that data visualisation is one of the techniques we use to take advantage of this fact.

Plus I try to talk about recent news stories where statistical insight contradicts what the press is implying, making use of sites like [Gapminder](http://www.gapminder.org/) to find the representation before choosing the story.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:11:47.797 | 2010-07-19T20:11:47.797 | null | null | 55 | null |

90 | 2 | null | 75 | 4 | null | [R bloggers](http://www.r-bloggers.com/) has been steadily supplying me with a lot of good pragmatic content.

From the author:

```

R-Bloggers.com is a central hub (e.g: A blog aggregator) of content

collected from bloggers who write about R (in English).

The site will help R bloggers and users to connect and follow

the “R blogosphere”.

```

| null | CC BY-SA 2.5 | null | 2010-07-19T20:12:24.130 | 2010-07-19T20:12:24.130 | null | null | 22 | null |

91 | 2 | null | 23 | 26 | null | As user28 said in comments above, the pdf is the first derivative of the cdf for a continuous random variable, and the difference for a discrete random variable.

In the continuous case, wherever the cdf has a discontinuity the pdf has an atom. Dirac delta "functions" can be used to represent these atoms.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:15:54.823 | 2010-07-20T08:52:27.083 | 2010-07-20T08:52:27.083 | 87 | 87 | null |

93 | 1 | null | null | 7 | 481 | We're trying to use a Gaussian process to model h(t) -- the hazard function -- for a very small initial population, and then fit that using the available data. While this gives us nice plots for credible sets for h(t) and so on, it unfortunately is also just pushing the inference problem from h(t) to the covariance function of our process. Perhaps predictably, we have several reasonable and equally defensible guesses for this that all produce different result.

Has anyone run across any good approaches for addressing such a problem? Gaussian-process related or otherwise?

| Robust nonparametric estimation of hazard/survival functions based on low count data | CC BY-SA 2.5 | null | 2010-07-19T20:17:07.643 | 2010-09-16T12:33:50.390 | 2010-09-16T12:33:50.390 | null | 61 | [

"nonparametric",

"survival",

"hazard"

] |

94 | 2 | null | 75 | 5 | null | Quick R site is basic, but quite nice for start [http://www.statmethods.net/index.html](http://www.statmethods.net/index.html) .

| null | CC BY-SA 2.5 | null | 2010-07-19T20:18:24.520 | 2010-07-19T20:18:24.520 | null | null | null | null |

95 | 1 | null | null | 7 | 745 | I have been using various GARCH-based models to forecast volatility for various North American equities using historical daily data as inputs.

Asymmetric GARCH models are often cited as a modification of the basic GARCH model to account for the 'leverage effect' i.e. volatility tends to increase more after a negative return than a similarly sized positive return.

What kind of a difference would you expect to see between a standard GARCH and an asymmetric GARCH forecast for a broad-based equity index like the S&P 500 or the NASDAQ-100?

There is nothing particularly special about these two indices, but I think it is helpful to give something concrete to focus the discussion, as I am sure the effect would be different depending on the equities used.

| How Large a Difference Can Be Expected Between Standard GARCH and Asymmetric GARCH Volatility Forecasts? | CC BY-SA 2.5 | null | 2010-07-19T20:21:35.683 | 2012-07-26T19:47:59.577 | 2010-07-20T06:14:40.363 | 154 | 57 | [

"time-series",

"garch",

"volatility-forecasting",

"finance"

] |

96 | 2 | null | 75 | 4 | null | Another great resource is [the LearnR blog](http://learnr.wordpress.com/), which went through an extensive study of visualizations with lattice and ggplot2.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:22:44.807 | 2010-07-19T20:22:44.807 | null | null | 5 | null |

97 | 1 | 104 | null | 69 | 68448 | I have some [ordinal data](http://en.wikipedia.org/wiki/Ordinal_scale#Ordinal_scale) gained from survey questions. In my case they are [Likert style](http://en.wikipedia.org/wiki/Likert_scale) responses (Strongly Disagree-Disagree-Neutral-Agree-Strongly Agree). In my data they are coded as 1-5.

I don't think means would mean much here, so what basic summary statistics are considered usefull?

| What are good basic statistics to use for ordinal data? | CC BY-SA 2.5 | null | 2010-07-19T20:23:22.603 | 2021-04-04T16:52:54.747 | 2021-04-04T16:52:54.747 | 11887 | 114 | [

"descriptive-statistics",

"ordinal-data",

"likert",

"faq"

] |

98 | 2 | null | 1 | 20 | null | Eliciting priors is a tricky business.

[Statistical Methods for Eliciting Probability Distributions](http://www.stat.cmu.edu/tr/tr808/tr808.pdf) and [Eliciting Probability Distributions](http://www.jeremy-oakley.staff.shef.ac.uk/Oakley_elicitation.pdf) are quite good practical guides for prior elicitation. The process in both papers is outlined as follows:

- background and preparation;

- identifying and recruiting the expert(s);

- motivation and training the expert(s);

- structuring and decomposition (typically deciding precisely what variables should

be elicited, and how to elicit joint distributions in the multivariate case);

- the elicitation itself.

Of course, they also review how the elicitation results in information that may be fit to or otherwise define distributions (for instance, in the Bayesian context, [Beta distributions](http://en.wikipedia.org/wiki/Beta_distribution)), but quite importantly, they also address common pitfalls in modeling expert knowledge (anchoring, narrow and small-tailed distributions, etc.).

| null | CC BY-SA 2.5 | null | 2010-07-19T20:23:57.330 | 2010-07-19T20:56:56.330 | 2010-07-19T20:56:56.330 | 39 | 39 | null |

99 | 2 | null | 73 | 8 | null | [multicore](http://cran.r-project.org/web/packages/multicore/index.html) is quite nice for tool for making faster scripts faster.

[cacheSweave](http://cran.r-project.org/web/packages/cacheSweave/index.html) saves a lot of time when using `Sweave`.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:25:08.793 | 2010-07-19T20:25:08.793 | null | null | null | null |

100 | 1 | 110 | null | 7 | 9712 | I'd like to see the answer with qualitative view on the problem, not just definition. Examples and analogous from other areas of applied math also would be good.

I understand, my question is silly, but I can't find good and intuitive introduction textbook on signal processing — if someone would suggest one, I will be happy.

| What's the purpose of window function in spectral analysis? | CC BY-SA 3.0 | null | 2010-07-19T20:30:23.320 | 2018-02-19T08:31:21.727 | 2018-02-19T08:31:21.727 | 128677 | 117 | [

"signal-processing",

"spectral-analysis"

] |

101 | 2 | null | 31 | 189 | null |

## Understanding $p$-value

Suppose, that you want to test the hypothesis that the average height of male students at your University is $5$ ft $7$ inches. You collect heights of $100$ students selected at random and compute the sample mean (say it turns out to be $5$ ft $9$ inches). Using an appropriate formula/statistical routine you compute the $p$-value for your hypothesis and say it turns out to be $0.06$.

In order to interpret $p=0.06$ appropriately, we should keep several things in mind:

- The first step under classical hypothesis testing is the assumption that the hypothesis under consideration is true. (In our context, we assume that the true average height is $5$ ft $7$ inches.)

- Imagine doing the following calculation: Compute the probability that the sample mean is greater than $5$ ft $9$ inches assuming that our hypothesis is in fact correct (see point 1).

In other words, we want to know $$\mathrm{P}(\mathrm{Sample\: mean} \ge 5 \:\mathrm{ft} \:9 \:\mathrm{inches} \:|\: \mathrm{True\: value} = 5 \:\mathrm{ft}\: 7\: \mathrm{inches}).$$

The calculation in step 2 is what is called the $p$-value. Therefore, a $p$-value of $0.06$ would mean that if we were to repeat our experiment many, many times (each time we select $100$ students at random and compute the sample mean) then $6$ times out of $100$ we can expect to see a sample mean greater than or equal to $5$ ft $9$ inches.

Given the above understanding, should we still retain our assumption that our hypothesis is true (see step 1)? Well, a $p=0.06$ indicates that one of two things have happened:

- (A) Either our hypothesis is correct and an extremely unlikely event has occurred (e.g., all $100$ students are student athletes)

or

- (B) Our assumption is incorrect and the sample we have obtained is not that unusual.

The traditional way to choose between (A) and (B) is to choose an arbitrary cut-off for $p$. We choose (A) if $p > 0.05$ and (B) if $p < 0.05$.

| null | CC BY-SA 3.0 | null | 2010-07-19T20:32:08.183 | 2015-09-14T16:14:44.860 | 2020-06-11T14:32:37.003 | -1 | null | null |

102 | 2 | null | 44 | 2 | null | For me [Illuminating the Path](http://nvac.pnl.gov/agenda.stm) report has been always good point of reference.

For more recent overview you can also have a look at good [article](http://queue.acm.org/detail.cfm?id=1805128) by Heer and colleagues.

But what would explain better than visualization itself?

([Source](http://blog.ffctn.com/what-is-data-visualization))

| null | CC BY-SA 2.5 | null | 2010-07-19T20:32:14.283 | 2010-11-23T20:42:01.350 | 2010-11-23T20:42:01.350 | 22 | 22 | null |

103 | 1 | null | null | 50 | 10817 | What is the best blog on data visualization?

I'm making this question a community wiki since it is highly subjective. Please limit each answer to one link.

---

Please note the following criteria for proposed answers:

>

[A]cceptable answers to questions like this ...need to supply adequate descriptions and reasoned justification. A mere hyperlink doesn't do it. ...[A]ny future replies [must] meet ...[these] standards; otherwise, they will be deleted without further comment.

| What is your favorite data visualization blog? | CC BY-SA 3.0 | null | 2010-07-19T20:33:26.713 | 2022-11-29T16:37:00.783 | 2016-10-13T15:18:16.230 | 28666 | 5 | [

"data-visualization",

"references"

] |

104 | 2 | null | 97 | 29 | null | A frequency table is a good place to start. You can do the count, and relative frequency for each level. Also, the total count, and number of missing values may be of use.

You can also use a contingency table to compare two variables at once. Can display using a mosaic plot too.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:34:46.820 | 2010-07-19T20:34:46.820 | null | null | 74 | null |

105 | 2 | null | 103 | 19 | null | [FlowingData](http://flowingdata.com/) | Data Visualization, Infographics, and Statistics

| null | CC BY-SA 3.0 | null | 2010-07-19T20:35:34.510 | 2012-10-24T14:50:25.800 | 2012-10-24T14:50:25.800 | 615 | 46 | null |

107 | 2 | null | 103 | 11 | null | [information aesthetics](https://web.archive.org/web/20120101181841/http://infosthetics.com/) - Data Visualization & Information Design

| null | CC BY-SA 4.0 | null | 2010-07-19T20:36:01.200 | 2022-11-29T16:27:06.847 | 2022-11-29T16:27:06.847 | 362671 | 46 | null |

108 | 2 | null | 103 | 17 | null | [Information Is Beautiful](https://informationisbeautiful.net/) | Ideas, issues, knowledge, data - visualized!

| null | CC BY-SA 4.0 | null | 2010-07-19T20:36:47.800 | 2022-11-29T16:27:52.770 | 2022-11-29T16:27:52.770 | 362671 | 5 | null |

109 | 1 | null | null | 5 | 11443 | Following one-way ANOVA, there are many possible follow-up multiple comparison tests. Holm's test (or better, the Holm-Sidak) test has lots of power, but because it works in a stepwise manner, it cannot compute confidence intervals. Its advantage over the tests than can compute confidence intervals (Tukey, Dunnett) is that is has more power. But is it fair to say that the Holm method always has more power than the methods of Tukey and Dunnet? Or does it depend...?

| Power of Holm's multiple comparison testing compared to others | CC BY-SA 2.5 | null | 2010-07-19T20:37:21.667 | 2010-08-04T14:38:22.450 | null | null | 25 | [

"multiple-comparisons",

"statistical-power"

] |

110 | 2 | null | 100 | 5 | null | It depends on where you apply the window function. If you do it in the time domain, it's because you only want to analyze the periodic behavior of the function in a short duration. You do this when you don't believe that your data is from a stationary process.

If you do it in the frequency domain, then you do it to isolate a specific set of frequencies for further analysis; you do this when you believe that (for instance) high-frequency components are spurious.

The first three chapters of "A Wavelet Tour of Signal Processing" by Stephane Mallat have an excellent introduction to signal processing in general, and chapter 4 goes into a very good discussion of windowing and time-frequency representations in both continuous and discrete time, along with a few worked-out examples.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:40:36.573 | 2010-07-19T20:40:36.573 | null | null | 61 | null |

111 | 2 | null | 40 | 11 | null | In R, the default setting for random number generation are:

- For U(0,1), use the Mersenne-Twister algorithm

- For Guassian numbers use the numerical inversion of the standard normal distribution function.

You can easily check this, viz.

```

> RNGkind()

[1] "Mersenne-Twister" "Inversion"

```

It is possible to change the default generator to other PRNGs, such as Super-Duper,Wichmann-Hill, Marsaglia-Multicarry or even a user-supplied PRNG. See the ?RNGkind for further details. I have never needed to change the default PRNG.

The [C GSL](http://www.gnu.org/software/gsl/manual/html_node/Random-number-environment-variables.html) library also uses the [Mersenne-Twister](http://www.gnu.org/software/gsl/manual/html_node/Random-number-generator-algorithms.html) by default.

| null | CC BY-SA 2.5 | null | 2010-07-19T20:41:24.917 | 2010-07-19T20:41:24.917 | null | null | 8 | null |

112 | 2 | null | 103 | 2 | null | I see all my favorite blogs have been listed. So I'll give you this one:

[I Love Charts](http://ilovecharts.tumblr.com/)

It's a bit light hearted.

| null | CC BY-SA 3.0 | null | 2010-07-19T20:52:27.263 | 2012-10-24T14:59:02.753 | 2012-10-24T14:59:02.753 | 615 | 12 | null |